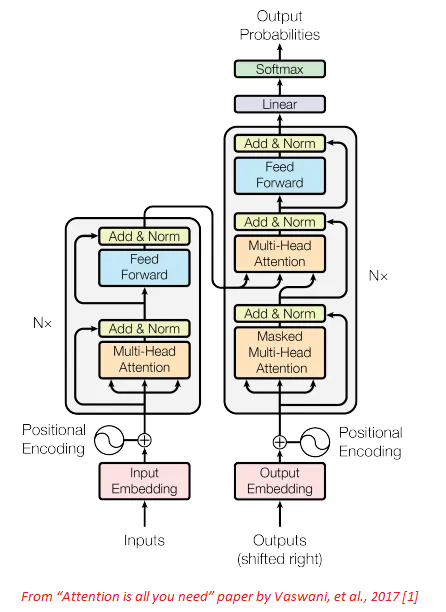

Attention Is All You Need – transformer architecture

“Attention Is All You Need” is the research paper that introduced the transformer, a neural network architecture that has become widely used for natural language processing (NLP) tasks.

The paper was authored by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin, and was published in 2017.

The transformer was introduced as an alternative to the recurrent neural networks (RNNs) and convolutional neural networks (CNNs) then used for NLP tasks. The authors observed that these architectures struggle to capture long-range dependencies in language, which matter for many NLP tasks: an RNN processes tokens one at a time, so information linking distant words must pass through many intermediate steps, and a CNN needs many stacked layers to connect distant positions. The transformer instead relies on a mechanism called attention, which relates any two positions in a sequence directly.

Attention lets the model weigh every position in the input sequence by its relevance to the position being processed, rather than passing information step by step as in RNNs or through local windows as in CNNs. This is particularly effective for tasks such as machine translation, where the model needs to consider the entire input sentence to generate an accurate translation.
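To make the idea concrete, here is a minimal sketch of the scaled dot-product attention defined in the paper, softmax(QKᵀ / √d_k)·V, written in plain Python so it has no dependencies. The function names and the toy two-dimensional "embeddings" are assumptions made for illustration. The sketch also leaves out the learned projections, multiple heads, masking, and positional encodings that a real transformer layer would include.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention over lists of vectors.

    Returns (outputs, weights), where weights[i][j] is how strongly
    position i attends to position j.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        # Compare this query with every key, however far apart they are.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        row = softmax(scores)
        weights.append(row)
        # The output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[c] for w, v in zip(row, values)) for c in range(d_v)]
        )
    return outputs, weights


# Hypothetical 2-d embeddings for a three-token sequence (self-attention:
# the same vectors serve as queries, keys, and values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how much one token draws on every other token. In this toy input the first token attends equally to itself and to the third token, and less to the second, because its dot product with the second token is zero.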

The transformer has since become a standard choice for NLP and has been applied to language modelling, text classification, question answering, and summarization. Its success led to pre-trained language models such as BERT, GPT, and RoBERTa, which achieved state-of-the-art performance on a variety of NLP tasks.

Some of the NLP tasks to which the transformer architecture has been applied are:

- Machine Translation: The transformer architecture has been used to build state-of-the-art machine translation models. The original paper reported state-of-the-art BLEU scores on the WMT 2014 English-to-German and English-to-French benchmarks, and transformer-based models are also used in production systems such as Google Translate.

- Text Summarization: The transformer architecture has been used to build models that can summarize long documents or articles into shorter summaries. These models use the attention mechanism to identify important sentences or phrases in the input document and generate a summary that captures the main points.

- Question Answering: The transformer architecture has been used to build models that can answer questions posed in natural language. These models use the attention mechanism to identify relevant parts of the input document and generate an answer that addresses the question.

- Language Modelling: The transformer architecture has been used to build large pre-trained language models, such as BERT and GPT, which can be fine-tuned for specific NLP tasks. These models have achieved state-of-the-art results on a wide range of NLP tasks, including sentiment analysis, named entity recognition, and text classification.

- Dialogue Generation: The transformer architecture has also been used to build models that generate coherent and contextually appropriate responses in dialogue systems. These models use the attention mechanism to take the preceding conversation into account and generate responses that are relevant and natural-sounding.

The success of the transformer architecture has led to new models and techniques that build on its strengths, and it is expected to remain a key tool in NLP and beyond.